If you are reading this, you are probably in agreement that the Open Networking revolution is real! I recently attended the OCP Global Summit and the Linux Foundation ONE Summit, and there was indeed electricity in the air. The size of the community has grown dramatically, and it attracts new users and vendors every day.

At Hedgehog, we’re building a network fabric using a fully open-source NOS (SONiC) for modern cloud-native workloads. When I talk to people about what is happening in the SONiC ecosystem, the most common response is “Isn’t this what Cumulus Networks tried to do?”

You see, “Open Networking” is not new. If you have been around this segment of the networking industry, you are likely familiar with the rise of a company named Cumulus Networks.

Enter the Rocket Turtle

Cumulus had quite an exciting rise to stardom, but failed to create what the industry needed: A REAL REVOLUTION.

Cumulus based their entire company strategy on the idea that all server infrastructure had already moved from proprietary operating systems to Linux. Server admins now had tools (Ansible/Chef/Puppet) that allowed them to manage thousands of devices, yet network operators were stuck with clunky, stone-age CLI toolsets, and as a result they couldn’t scale their expertise to manage more devices. So Cumulus set out to create a Linux-based Network Operating System (NOS), built on a generic distribution (Debian), that could run on white box hardware.

White box switching was also somewhat new at that time. The largest customers in the world (mostly hyperscalers) had been buying commodity switches from Original Design Manufacturers (ODMs) that contained Broadcom, Marvell, or Mellanox ASICs. Since Cisco was using the Broadcom Trident 2 in many of its products at that time, Cumulus could promise the same level of throughput, provided they got the software to properly program the ASIC hardware.

Well, that was the plan anyway. There was a LOT of excitement about this concept. Network administrators were full of complaints at the time, from costly licensing, to insanely priced proprietary SFP optics that were identical to what customers could buy for 1/10th of the price, to box “churn” (vendors declaring one platform EOL as soon as a new one was released). One well-known analyst even went so far as to say that Cumulus and white box switching were an existential threat to the major box vendors. That prediction was based on customers being willing to give up their CLIs for a Linux bash prompt.

Well, Cumulus took off, and indeed acquired a lot of customers, but it was incredibly hard to win each one. You see, many vendors had used some interesting sales tactics between 2000 and 2010. One of these tactics was a marketing and sales campaign named “Turn It On”. Basically, SEs were instructed to get customers to turn on every single feature that they possibly could, as this would create lock-in for future sales. A config for a device could not be easily converted to a different vendor’s syntax because several of the features didn’t even exist on the second vendor’s platform. Some of these features were indeed cool; some of them were just extra baggage. It didn’t matter much to customers that their configs were becoming more and more proprietary. The initiative worked, and many customers at that time had RFPs and RFQs that listed proprietary features as requirements.

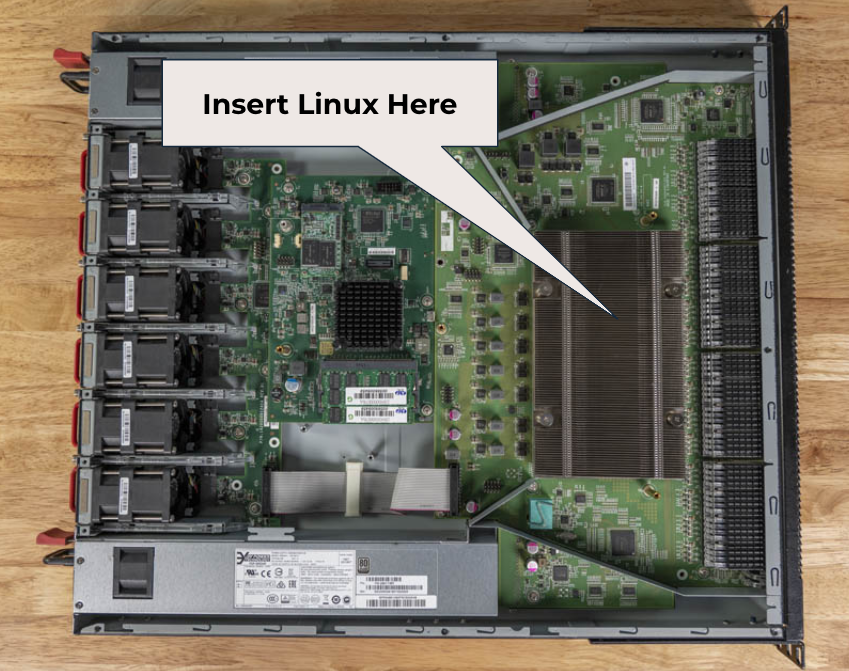

In order to acquire customers, Cumulus entered into a feature parity war with Cisco and Arista. Basically, they tried to add all of the features the market asked for. Some of these features were definitely needed; some of them were not. But the challenge was that Cumulus had to get a Linux device to do what a Cisco switch could do, and there were a couple of REALLY BIG problems with that. Linux was designed to be a server operating system, so it didn’t really care about networking best practices, and all sorts of things weren’t there or didn’t function ideally for networking. Let’s take a look at the big issues and how Cumulus solved them:

Cumulus’s Contributions to Open Networking

Switch ASIC acceleration – switchd & SAI

Linux wasn’t originally designed to manage an Ethernet switch. There were no daemons or services for programming and managing TCAM or flow tables. Server admins were fine with new flows being programmed in NICs a few seconds after they made a change. But for data center networking? A few seconds is an eternity. So Cumulus created a new daemon called switchd, which was responsible for dealing with the hardware and the ASIC. This was a totally new concept for customers at the time. Unfortunately, Cumulus made the strategic decision to keep this particular bit of code proprietary and closed-source. Since switchd is required for Cumulus to run on switching hardware, this resulted in the strange marketing distinction between “Open Networking” and “Open Source”. You see, Cumulus Linux was “Open Networking”, meaning it let you consume this new disaggregated hardware/software model, but it was not completely open source. Switchd wouldn’t function properly without the user entering a license key. Had SAI existed, Cumulus would have had more options to potentially open source switchd, but timing is king!

But this was a really cool idea and it was the forerunner to the Switch Abstraction Interface (SAI) that we all now use in SONiC to support multiple hardware platforms.

Layer-3 Routing – Quagga becomes FRR

Linux had always lacked a really good routing stack. At that time there were not many options: static routes, some proprietary and expensive router software, or some weird software package called Quagga. Almost everyone had a “WTF” moment when they first heard someone mention “Quagga”. What a bizarro name, right? The quagga was a subspecies of zebra that was hunted to extinction in the 19th century. Maybe that should have foreshadowed what happened to the software. Anyhow, Quagga (the networking thing, not the emo horse) is a suite of routing protocol daemons including BGP and OSPF. Before Cumulus, Quagga was mostly experimental. It had a lot of bugs and really didn’t scale very well. Lots of BGP nerd knobs were missing. Cumulus began improving Quagga and upstreaming all of their changes to the maintainer, who happened to be a single person using some weird version control methodology. This required a ton of engineering. Determining actual route scaling limitations for FIB and TCAM is tricky enough if you are the product manager for one device, but across multiple ASICs, CPUs, SerDes, port blocks, and optics?

That’s crazy, right? But indeed, Cumulus pushed forward, determined to out-engineer and out-automate this complexity. Over time, Quagga became quite reliable and was shown to scale far enough to ingest the entire global routing table. However, because the original maintainer was slow to integrate the changes, Cumulus eventually decided to fork Quagga into a new project called Free Range Routing (FRR), now known as FRRouting. We all know that FRR can handle the internet table, which is really as complex and dirty as you can get. That project is now the most widely deployed and used open source routing suite, running in massive clouds such as Azure and AWS.

Cool Ideas Become Legit

This is actually one of my favorite examples of how Cumulus demonstrated the democratization of networking: BGP Unnumbered. That’s right, did you know that this was not a “big vendor” creation? Cumulus designed, polished, and promoted the implementation of BGP Unnumbered, and then let everyone copy their masterpiece. Imitation is the sincerest form of flattery, right? Other vendors implemented this simple technique in their NOS practically overnight.

Basically, Cumulus was building Linux networking, optimized for the data center.

Linux Network Interface Management – ifupdown gets an upgrade

As I’ve said before, Linux was not designed to be a switch OS, and that was painfully obvious whenever you tried to make changes to a single port with the native Linux networking commands. Basically, if you wanted to change a port configuration, or even bounce a port, you would essentially be bouncing all of the ports on the server at once. This is not a big deal for a server when you are doing maintenance, but imagine bouncing one interface on a 48-port switch and having all of the ports go down. That’s ridiculous and unacceptable in the data center. This was a fairly ingrained technical design in Linux, and changing it required a HUGE amount of effort. Cumulus eventually released ifupdown2, which they upstreamed so that everyone could get the benefits of their work, and now the new capabilities for port management can be enjoyed by the entire open networking community.
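To make the idea concrete, here is a minimal Python sketch of the general technique: compare the running state with the desired state and touch only the ports that actually differ. This is an illustration only, not how ifupdown2 is implemented. The function name `ports_to_reconfigure`, the `swp` port names, and the settings dictionaries are all hypothetical.

```python
def ports_to_reconfigure(running, desired):
    """Return the sorted names of ports whose settings differ.

    Both arguments are hypothetical dicts mapping a port name to its
    settings. A port present on only one side also counts as changed.
    """
    changed = []
    for name in sorted(set(running) | set(desired)):
        if running.get(name) != desired.get(name):
            changed.append(name)
    return changed


# Hypothetical running state: three ports, all at the default MTU.
running = {
    "swp1": {"mtu": 1500, "up": True},
    "swp2": {"mtu": 1500, "up": True},
    "swp3": {"mtu": 1500, "up": True},
}

# Hypothetical desired state: only swp2 changes.
desired = {
    "swp1": {"mtu": 1500, "up": True},
    "swp2": {"mtu": 9216, "up": True},
    "swp3": {"mtu": 1500, "up": True},
}

print(ports_to_reconfigure(running, desired))
```

In this sketch only `swp2` is selected, so the other ports would be left alone instead of being bounced along with it.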

Automation & Programmability – CLIs turn to Ansible

Cumulus did not provide a CLI or API when they first launched. The idea was that the CLI was a dead end and nobody should be using one to manage a data center fabric switch. So Cumulus took the lead and created the first real Ansible playbooks for automating a network, causing Cisco to do a quick “me too” by getting a third-party developer to create Ansible tools for the Nexus 9k. Indeed, the DevOps-first approach was quite forward-thinking, and it really pushed the industry to move faster towards a more programmable model.

That is a LOT of development and technical debt resolution for a startup with little income. Some people already know how this ended up…

In 2020, Nvidia acquired Cumulus Networks, shortly after it purchased Mellanox. At the time, Cumulus supported two major ASIC vendors, Broadcom and Mellanox. Shortly after the deal was announced, Broadcom revoked Cumulus’s access to the Broadcom SDK, effectively killing Cumulus support for Broadcom ASICs. An unofficial estimate was that 90% of Cumulus’s customers used Broadcom ASICs (sold as Dell, Edgecore, etc.). The multi-vendor capabilities of Cumulus ended, and Cumulus is now a piece of the Nvidia networking portfolio, with specific optimizations for AI/ML workloads and storage networking.

Let’s Hear It For Cumulus!

I like to tell people that Cumulus broke down a wall in the industry by throwing all of their weight into it, basically breaking their neck in the process. What I mean is that Cumulus created incredible forward momentum for the open source and open networking community, but in doing so they expended so many resources (development cost and time) that they could not effectively differentiate beyond just being a Linux NOS. Cumulus started the revolution we’re currently in the middle of, but the market was too slow to adopt the disaggregated model. While they weren’t quite the industry disruptor that everyone wanted, they broke a lot of legacy business models, and many vendors are still struggling to address the new reality.

So without further ado, on behalf of the community, I would like to offer up a HUGE THANK YOU to Cumulus and all the employees who helped to further the state of the art of networking.

How is today different?

Cumulus did not effectively attach their technology to any major transition in the market. One could argue that they tried to create the transition itself, but bash instead of a CLI and a white box T2 instead of a Cisco T2 did not give enterprises enough motivation to update all of their NOCs and monitoring systems, let alone replace all of their CCIEs with Linux experts. Instead, they should have been looking for a major transition to attach to.

SONiC has HUGE momentum. It was designed to be automated like a modern application. Hedgehog is working to bring all of the goodness of the Kubernetes platform transition to SONiC networking. This currently includes datacenter network fabrics and edge application hosting platforms, but will one day extend to campus, WAN, and other deployment models. No other open-source NOS has a contributing community as large, and with all of the major vendors now supporting SONiC, it is expected that SONiC will be the standard NOS for the majority of new builds in the near future. The time for SONiC adoption is NOW.

Till next time…

Josh Saul has pioneered open source network solutions for more than 25 years. As an architect, he built core networks for GE, Pfizer and NBC Universal. As an engineer at Cisco, Josh advised customers in the Fortune 100 financial sector and evangelized new technologies to customers. More recently, Josh led marketing and product teams at VMware (acquired by Broadcom), Cumulus Networks (acquired by Nvidia), and Apstra (acquired by Juniper). Josh lives in New York City with his two children and is an avid SCUBA diver.